On-premise LLMs: Toward Autonomous Conversational AI

1. How it all began

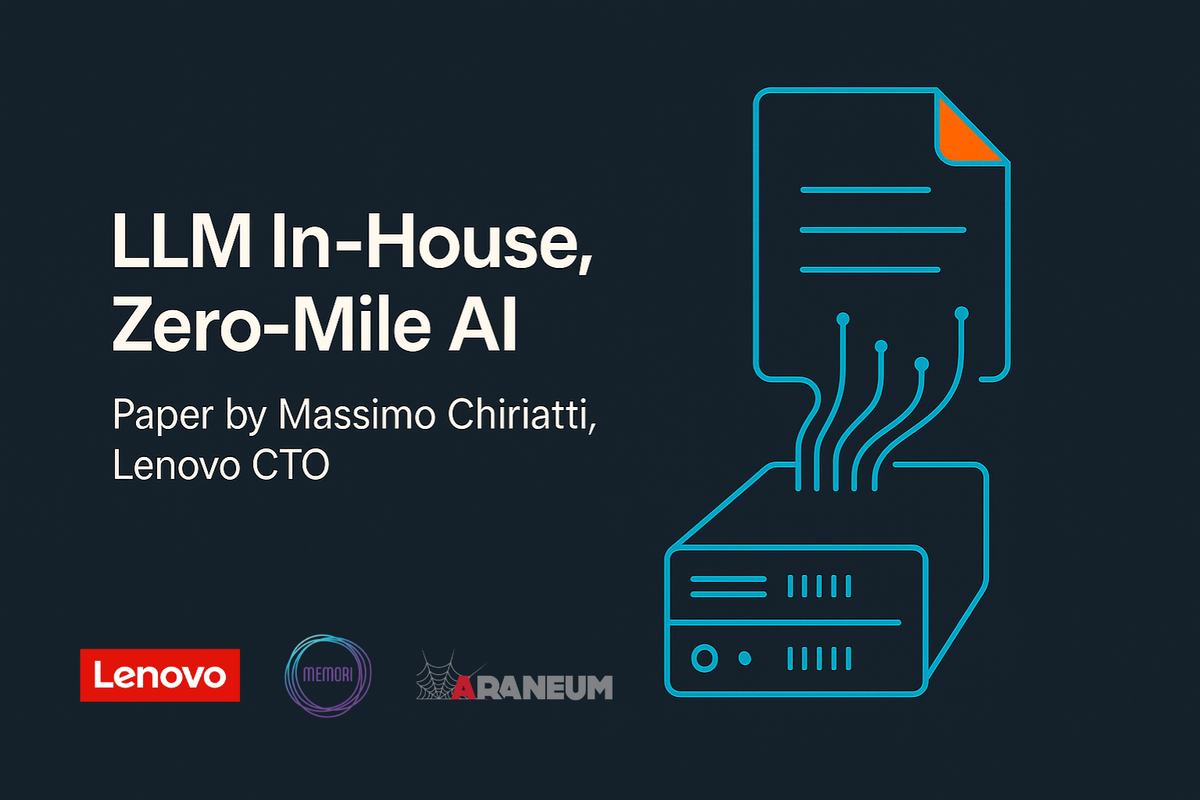

When, at the end of 2024, Memori and Araneum helped Lenovo assemble its first server dedicated to LLMs, the idea was straightforward: see whether an open-source model could withstand a real company’s workload without relying on the cloud.

That pilot, described in our first report, drew plenty of attention.

Six months later we went back to the lab, this time with a more powerful machine, more mature models and a more ambitious goal: bringing enterprise-grade conversational AI directly into customers’ data centres.

That effort produced Massimo Chiriatti’s second paper, written with support from the Memori and Araneum teams.

2. Why move AI “in-house”? Four clear ideas

After the first pilot (late 2024) it was no longer enough to know that the local LLM worked; we wanted to make clear why bringing it on-premise is truly worthwhile.

There are several strategic reasons that justify an on-premise choice beyond simple cost comparisons:

- Full control over data privacy – On-premise solutions ensure that sensitive corporate data never passes through third-party systems. As highlighted in the report’s foreword, this approach “avoids dependence on external providers, preserves the confidentiality of sensitive data and eliminates unmanageable service disruptions.”

- Technological autonomy – Direct control of the infrastructure guarantees operational continuity even in critical scenarios, without being subject to external providers’ policy changes or limits.

- Regulatory compliance – In highly regulated sectors (e.g. healthcare, finance, public administration) full control over data and its processing is essential to meet requirements such as GDPR and other sector-specific rules.

- Optimisation for enterprise workloads – A dedicated infrastructure can be tuned precisely to an organisation’s usage patterns, delivering optimal performance for its specific use cases.

The joint studies by Memori and Lenovo aim to give companies tools for deciding when investing in proprietary infrastructure becomes more advantageous than paying variable cloud costs. The result is a decision framework that accounts for both economic factors and strategic data sovereignty.
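To make the economic side of that decision concrete, here is a minimal break-even sketch. It assumes a one-off hardware cost, a flat monthly running cost on-premise and a flat monthly cloud bill. The function name and all figures are hypothetical and are not taken from the report.

```python
import math


def break_even_months(upfront_cost: float,
                      monthly_onprem_cost: float,
                      monthly_cloud_cost: float) -> float:
    """Months after which cumulative on-prem spend drops below cloud spend.

    Returns math.inf when on-prem never catches up, i.e. when its
    running cost is not lower than the cloud bill.
    """
    monthly_saving = monthly_cloud_cost - monthly_onprem_cost
    if monthly_saving <= 0:
        return math.inf
    return upfront_cost / monthly_saving


# Made-up illustrative figures, NOT measurements from the study.
months = break_even_months(upfront_cost=120_000,
                           monthly_onprem_cost=2_000,
                           monthly_cloud_cost=12_000)
print(f"Break-even after {months:.1f} months")
```

A real evaluation would also weigh the non-economic factors listed above (privacy, autonomy, compliance), which a single number cannot capture.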

These four points form the common thread linking the 2024 pilot to the hardware described in Section 3 and to the new Board-of-Experts architecture.

Looking for the numbers?

Section 6 provides concrete throughput, latency and cost figures showing how much on-premise really changes the game.

3. Lenovo hardware

Lenovo supplied a ThinkSystem SR675 V3 fitted with two NVIDIA H200 GPUs, 120 GB each. In lay terms: enough VRAM to run 70-billion-parameter models with a 128 k-token context window—without painful swapping.

In practice that means you can serve 200 concurrent users without latency spiking and still deliver sub-second replies on mid-size models.
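A rough back-of-the-envelope check shows why this amount of VRAM matters. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts only the weights. The KV cache, which grows with context length and concurrent users, has to fit in whatever is left. The function name is illustrative.

```python
def weights_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    Assumes 16-bit weights by default; quantised models need less.
    """
    return params_billion * bytes_per_param


TOTAL_VRAM_GB = 2 * 120  # two GPUs at the 120 GB each quoted above

for name, size_b in [("14B model", 14), ("32B model", 32), ("70B model", 70)]:
    need = weights_gb(size_b)
    left = TOTAL_VRAM_GB - need
    print(f"{name}: ~{need:.0f} GB weights, ~{left:.0f} GB left for KV cache")
```

Under these assumptions a 14B model needs about 28 GB for its weights, in the same range as the 27 GB reported for Qwen 3-14B in Section 4.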

4. Model selection: bigger isn’t always better

We put the most anticipated open-source releases on the bench:

- Qwen 3 (0.6 B → 32 B)

- Llama 3.3 (up to 70 B)

- Gemma 3-27B-IT

- IBM Granite 3.3

The real sweet spot for mixed enterprise workloads is Qwen 3-14B: it uses only 27 GB of VRAM, keeps latency low and, above all, produced no hallucinations in our tests, a problem that sub-8 B models still show.

Llama 3.3 70 B is unbeatable for quality, but its resource hunger means you reserve it for polished copy or deep reasoning, not everyday FAQs.

IBM Granite 3.3-8B also shone (~85 req/s): perfect when you need lots of concurrency on little VRAM.

5. Inside the Board of Experts: the invisible orchestra that makes AI act

A single general-purpose model can handle surface questions but struggles when the user dives into detail (e.g. “What’s the part-number for the Legion 5 Pro 16 ACH6?”).

We needed specialised knowledge without forcing the customer to talk to ten different chatbots. Hence the Board of Experts: a manager of multiple AI Agents, each focused on its own domain.

5.1 The structure: one conductor, four sections

| Role | What it does | Example trigger |
|---|---|---|
| NOVA (President) | Receives every message, interprets it, chooses the best-suited agent. | “I’m looking for a 15-inch gaming laptop” → routes to Consumer |
| Consumer | IdeaPad & desktop lines, Yoga, Legion, LOQ; consumer monitors. | Questions on prices, consumer P/Ns, gaming requirements |
| Commercial | ThinkPad range, workstations, business monitors. | “Need a rugged laptop for engineers on site” |
| Motorola | Razr, Edge, Moto g/e, ThinkPhone smartphones. | Queries on smartphone promos or business bundles |
| Services & Warranties | Warranty extensions, after-sales services, Lenovo software. | “How much is a three-year Premier Support Plus extension?” |

Each agent has its own “role prompt” and can call targeted functions (fresh HTML datasets) only when it lacks up-to-date info. That keeps prompts compact and cuts hallucination risk.
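The routing idea can be sketched in a few lines. This is only an illustration: the keyword matching below stands in for NOVA, which in the real system interprets each message with an LLM, and the `Agent` class, the role prompts and the keyword lists are all hypothetical. Function calling is not modelled here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Agent:
    name: str
    role_prompt: str      # hypothetical wording, not the production prompt
    keywords: List[str]   # crude stand-in for LLM-based intent detection


AGENTS = [
    Agent("Consumer", "You advise on consumer laptops, desktops and monitors.",
          ["gaming", "ideapad", "yoga", "legion", "loq"]),
    Agent("Commercial", "You advise on ThinkPad, workstations and business monitors.",
          ["thinkpad", "workstation", "business", "rugged"]),
    Agent("Motorola", "You advise on Motorola smartphones.",
          ["razr", "edge", "moto", "thinkphone", "smartphone"]),
    Agent("Services & Warranties", "You advise on warranties and after-sales services.",
          ["warranty", "support", "extension"]),
]


def route(message: str) -> Agent:
    """Pick the agent with the most keyword hits; fall back to the first agent."""
    text = message.lower()
    scored = [(sum(kw in text for kw in agent.keywords), agent) for agent in AGENTS]
    best_score, best_agent = max(scored, key=lambda pair: pair[0])
    return best_agent if best_score > 0 else AGENTS[0]


for msg in ["I'm looking for a 15-inch gaming laptop",
            "How much is a three-year Premier Support Plus extension?"]:
    print(f"{msg!r} -> {route(msg).name}")
```

Keeping one short role prompt per agent, rather than one prompt covering every product line, is what keeps the prompts compact.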

5.2 Why users really care

- Seamless dialogue – NOVA decides who speaks: the user asks once and gets prices, warranties and availability in sequence, no window-switching.

- Chat-level speed – Compact prompts and smart routing keep wait times minimal.

- Live information – Every agent queries live price lists and tech sheets; no stale data or manual copy-paste.

- Transparent scalability – With Qwen 3-14B the system handles 65+ requests/s, serving hundreds of users at once with no queues.

What does the user see? A single, fluid chat. Behind the scenes the question may jump from an 8 B LLM (Motorola) to 14 B (Consumer) or even 70 B (Commercial) in milliseconds—always on the same on-prem server.

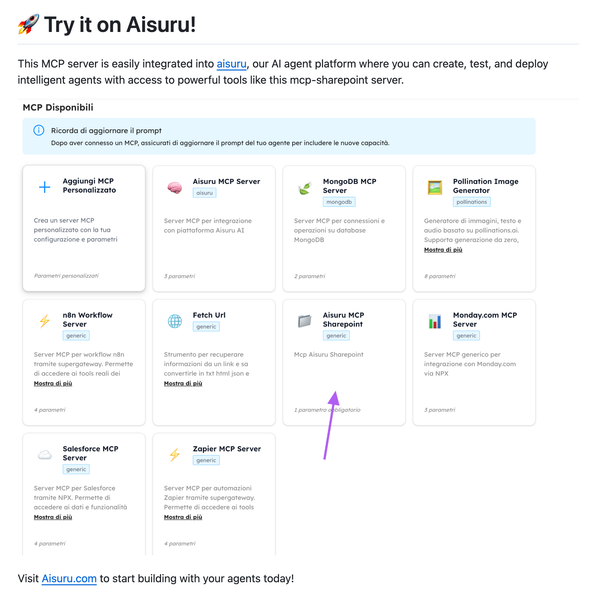

👉 Want to try it?

Write to demo@memori.ai and book a live demo of the Board of Experts—one of AIsuru’s gems.

Everything happens in a few hundredths of a second, orchestrated by AIsuru with no complicated prompts or manual integrations.

6. What we actually measured

- 12 realistic conversations from Lenovo (quotes, P/N questions, product comparisons).

- Throughput benchmarks with vLLM using 53 000 ShareGPT chats (fixed 128-token output).

- Stress tests with Apache JMeter: 50 KB prompts, ramp-up to 200 users.
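For readers less familiar with these metrics, the sketch below shows how throughput and latency can be derived from per-request start and end timestamps. It is a generic illustration with synthetic timings, not the scripts used in the study, and `summarise` is a hypothetical helper.

```python
from statistics import mean
from typing import Dict, List, Tuple


def summarise(requests: List[Tuple[float, float]]) -> Dict[str, float]:
    """Summarise completed requests given as (start_s, end_s) pairs."""
    latencies = [end - start for start, end in requests]
    first_start = min(start for start, _ in requests)
    last_end = max(end for _, end in requests)
    wall_clock = last_end - first_start
    return {
        "throughput_req_s": len(requests) / wall_clock,  # completed requests per second
        "mean_latency_s": mean(latencies),               # average time per request
        "max_latency_s": max(latencies),                 # slowest request
    }


# Synthetic timings for illustration only.
sample = [(0.0, 2.0), (0.5, 3.0), (1.0, 4.0), (1.5, 4.0)]
print(summarise(sample))
```

Note that throughput counts completed requests per second across all users, while latency is what a single user waits. Overlapping requests let the server sustain high throughput even when individual answers take several seconds.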

The numbers that matter—and what they mean

| Metric | What it is | Measured value | Why it matters |
|---|---|---|---|
| Throughput | Requests completed per second | 65 req/s (Qwen 3-14B); 35 req/s (Qwen 3-32B) | Real scalability: supports up to 200 concurrent users without service degradation |
| Performance boost | Improvement vs. previous setup | Up to 3× overall speed | Immediate ROI: triple capacity with the same licence spend |
| Response time | Generating complete answers (128 tokens) | 14–17 s average for detailed outputs | Professional UX: acceptable time for technical analyses |
| Technical reliability | Accuracy on critical data (Part Numbers) | Zero hallucinations with models ≥ 14B | Commercial credibility: the agent doesn’t invent SKUs or specs |

Bottom line: your sales team can rely fully on AI answers, and customers get accurate information even during traffic spikes.

7. Three lessons we learned

- “Medium” models are the new standard – No need to jump straight to 70 B: with 14 B parameters you already have an assistant that understands, doesn’t hallucinate and answers quickly.

- Function calling is indispensable – Linking the agent to live tabular sources (product sheets, price lists) halves hallucinations and lightens prompts. AIsuru excels at optimising inference here.

- On-prem really scales – Up-front cost aside, the H200 + vLLM combo handles traffic spikes you used to pay per token for, without network surprises.

8. What’s next?

- Download the full paper (in Italian) → available at this LINK.

- Book a 30-minute demo with the AIsuru team → write to demo@memori.ai to see AIsuru conducting a Board of Experts.

- Kick off your project → discuss generative-AI opportunities with the Memori team and see how they fit your business.

Conclusion

The cloud isn’t going away, but now you can decide which conversations stay “at home” and make them run up to three times faster than before.

With Lenovo providing hardware, Araneum handling methodology and benchmarks, AIsuru orchestrating agents and our know-how on open-source models, on-prem conversational AI is no longer experimental: it’s production-ready.